Introduction

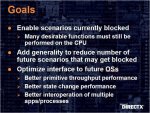

While the next major revision of DirectX is not expected until Longhorn’s launch, Microsoft’s DirectX group has been briefing developers on what’s in store for “DirectX Next” with presentations at Microsoft Meltdown and other developer conferences. Recently, this presentation was made available to the public via Microsoft’s Developer Network. The intent of this article is to give a more thorough treatment of the features listed for inclusion in DirectX Next and, in doing so, explore the kinds of capabilities DirectX Next may offer.

While the first seven revisions of DirectX brought mostly evolutionary enhancements and additions focused on very specific graphics features, such as environment and bump mapping, DirectX8 broke the trend and introduced a number of new, general-purpose systems. The most notable was the programmable pipeline, in which vertex and pixel shading were no longer controlled by simply tweaking a few parameters or toggling specific features on and off. Instead, DirectX8 gave you a set number of inputs and outputs and pretty much let you go nuts in between, doing whatever you wished as long as it fit within the hardware’s resources. At least, this is how vertex shading worked. Pixel shading, on the other hand, was extremely limited and not really all that programmable. You could perform a handful of vector operations on a handful of inputs (vertex shader outputs, pixel shader constants, and textures) and write a single output, the frame buffer, but that was about it. DirectX8.1 provided some aid in this respect, but it wasn’t until DirectX9 that everything really started to fall into place.
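To make the limits of DirectX8 pixel shading concrete, here is a minimal Python emulation of the kind of work such a shader could do. It is a sketch under stated assumptions, not Direct3D code: the names `sample_texture` and `pixel_shader` are hypothetical, the sampler is a simplified nearest-neighbour lookup, and the body only roughly mirrors a short ps.1.1-style program (`tex t0`, `mul r0, t0, v0`, `add r0, r0, c0`) that modulates a texture sample by the interpolated vertex color, adds a pixel shader constant, and writes one clamped color.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]


def sample_texture(texture: List[List[RGBA]], u: float, v: float) -> RGBA:
    """Hypothetical simplified sampler: nearest-neighbour lookup with clamped coordinates."""
    height, width = len(texture), len(texture[0])
    x = min(max(int(u * width), 0), width - 1)
    y = min(max(int(v * height), 0), height - 1)
    return texture[y][x]


def pixel_shader(v0: RGBA, c0: RGBA, t0: RGBA) -> RGBA:
    """Hypothetical DirectX8-style pixel shader emulation.

    Inputs: v0 = interpolated vertex shader output (diffuse color),
            c0 = pixel shader constant, t0 = texture sample.
    Output: a single color destined for the frame buffer.
    """
    r0 = tuple(t * v for t, v in zip(t0, v0))          # roughly: mul r0, t0, v0
    r0 = tuple(r + c for r, c in zip(r0, c0))          # roughly: add r0, r0, c0
    return tuple(min(max(x, 0.0), 1.0) for x in r0)    # clamp to [0, 1] for output


# Usage: a 1x1 texture, a mid-grey vertex color, and a small red bias constant.
texture = [[(1.0, 0.5, 0.25, 1.0)]]
t0 = sample_texture(texture, 0.3, 0.7)
print(pixel_shader((0.5, 0.5, 0.5, 1.0), (0.25, 0.0, 0.0, 0.0), t0))
# prints (0.75, 0.25, 0.125, 1.0)
```

Note how little room there is: a few vector operations over three kinds of input and a single color out, with no loops or branches.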

Unified Shader Model

DirectX9 introduced versions 2.0, 2.x, and 3.0 of the pixel and vertex shader programming models. 2.0 was intended as the minimum specification that all DirectX9 graphics processors had to handle, 2.x existed for more advanced processors that could do a bit more than the specification called for, and 3.0 was intended for an entirely new generation of products (which should be available sometime in 2004). Pixel Shader 2.0 offered at least six times as many general-purpose registers as the DirectX8 models and squared the number of available instruction slots, bringing pixel shader functionality almost to the level of DirectX8 vertex shaders. At the same time, there has been a growing need for texture lookups in vertex shaders, especially for applications that want to use general-purpose displacement mapping. Consequently, the 3.0 pixel and vertex shading models have very similar capabilities, so much so that having two separate hardware units for vertex and pixel shading is becoming largely redundant. As a result, the 4.0 vertex and pixel shader models are identical in both syntax and feature set, allowing hardware to merge the separate units into a single pool. This has the added benefit that any increase in shading power increases both vertex and pixel shading performance.
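The load-balancing benefit of a single pool can be illustrated with a toy throughput model. This is an assumption-laden sketch, not a description of any real GPU: each shading unit is assumed to process one work item per cycle, the unit counts and workloads are hypothetical, and the fact that pixel work depends on vertex output is ignored. With dedicated units the busier stage sets the pace while the other stage's units sit partly idle; with a unified pool every unit shares the combined work.

```python
import math


def split_cycles(vertex_work: int, pixel_work: int,
                 vertex_units: int, pixel_units: int) -> int:
    """Dedicated units: each stage only uses its own hardware, so the busier stage sets the pace."""
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))


def unified_cycles(vertex_work: int, pixel_work: int, total_units: int) -> int:
    """Unified pool: any unit can run either kind of shader, so all work shares every unit."""
    return math.ceil((vertex_work + pixel_work) / total_units)


# Hypothetical workloads (work items) and a hypothetical 4 vertex + 12 pixel unit budget.
for name, v, p in [("pixel-heavy", 400, 4800), ("vertex-heavy", 3200, 2000)]:
    print(name, split_cycles(v, p, 4, 12), unified_cycles(v, p, 16))
# prints:
# pixel-heavy 400 325
# vertex-heavy 800 325
```

With these made-up numbers the split design needs 400 or 800 cycles depending on which stage is overloaded, while the unified pool needs 325 in both cases; adding units to the pool shortens vertex-bound and pixel-bound work alike.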

Unifying the shader models is great (there are several cases where you want to do something at the vertex level that is usually a pixel-level operation, or something at the pixel level that is usually done at the vertex level), but what about the instruction limits on these shaders, or resource limits altogether? Wouldn’t it be great if you could create as many textures as you wanted and write shaders of any length without ever running into those nasty hard limits? It sounds like wishful thinking, but it turns out that it’s not really that far off. There’s just one little problem with the memory systems of current graphics processors...