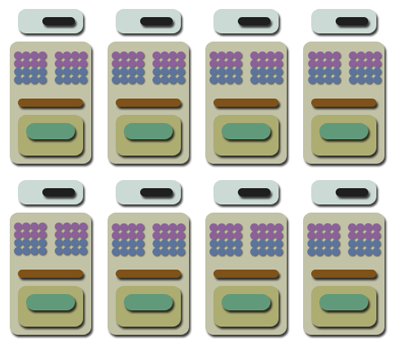

At the core of G80 is a homogeneous collection of floating point processors, be they for math ops or data 'address' (it's way more than just calculation of a memory address in reality) and fetch. Each math processor is a scalar (1-channel) ALU with exposed FP32 precision (full time, no partial precision calculations are possible), with rounding properties that conform to IEEE754 standards and that can theoretically (supposedly) dual-issue a MADD and MUL instruction in one cycle. More on that later. There are 128 such processors (called SPs by NVIDIA) in a full G80, grouped in clusters of 16, giving the outward appearance of an 8-way MIMD setup of 16-way SIMD SP clusters. Inwardly, each 16 SP cluster is further organised in two groups of 8 (let's call that 8x2) and the scheduler will effectively run the same instruction on each half cluster across a number of cycles, depending on thread type.
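The organisation above can be put into a few lines of arithmetic. This is only a sketch: the cluster and SP counts come from the text, but `batch_size` is a hypothetical parameter, since all we've said so far is that the cycle count depends on thread type.

```python
# Figures taken from the text: 8 clusters of 16 SPs, each split into two groups of 8.
CLUSTERS = 8
SPS_PER_CLUSTER = 16
HALF_CLUSTER_WIDTH = 8  # SPs that run the same instruction together


def total_sps(clusters=CLUSTERS, sps_per_cluster=SPS_PER_CLUSTER):
    """Scalar processors across the whole chip."""
    return clusters * sps_per_cluster


def cycles_per_instruction(batch_size, width=HALF_CLUSTER_WIDTH):
    """Cycles a half cluster needs to apply one instruction to a whole batch.

    batch_size is a hypothetical parameter (threads sharing one instruction);
    the real per-thread-type sizes are not given in this section.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return -(-batch_size // width)  # ceiling division


if __name__ == "__main__":
    print("SPs in a full chip:", total_sps())
    for size in (8, 16, 32):  # illustrative sizes only
        print(size, "threads ->", cycles_per_instruction(size), "cycle(s)")
```

The point of the model is simply that a wider batch occupies a half cluster for proportionally more cycles per instruction.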

Each cluster, as well as having its own dedicated register pool, also has access to the global register file, global constant cache and global texture cache (both of those read-only). More on those on-chip memories later.

Each cluster can process threads of three different types (vertex, geometry, pixel), independently and in the same clock cycle (and in fact it's likely more granular than that), and that's the crux of how the shading core works. With a scheduler per cluster and processing of all thread types, you have yourself a unified shading architecture: no clusters specifically tied to vertex, geometry or pixel data for the duration of the chip's operation, and the ability to perform the necessary work whenever it's needed. That almost self-scheduling, dynamically balanced, independent shading is what defines G80 from a data processing perspective. That means that in any given cycle, G80's SPs may all be working on the same thread type or, as a function of the thread schedulers running each cluster, working on vertex, geometry and pixel data in the same cycle.
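To see why that dynamic balancing matters, here's a toy model comparing a fixed split of units by thread type against a unified pool. Everything in it is an assumption made for illustration: the function names, the workload numbers, the unit counts, and the idea that one unit retires one work item per cycle. It is not a measurement of any real chip.

```python
import math


def cycles_fixed(work, units):
    """Fixed split: each thread type may only run on its own units.

    work and units are dicts keyed by thread type; the slowest type
    decides when the whole workload finishes.
    """
    return max(math.ceil(work[kind] / units[kind]) for kind in work)


def cycles_unified(work, total_units):
    """Unified pool: any unit can pick up any thread type."""
    return math.ceil(sum(work.values()) / total_units)


if __name__ == "__main__":
    # Hypothetical pixel-heavy workload, in abstract work items.
    work = {"vertex": 16, "geometry": 0, "pixel": 240}
    # Hypothetical fixed partition of 8 units.
    units = {"vertex": 2, "geometry": 2, "pixel": 4}
    print("fixed split:", cycles_fixed(work, units), "cycles")
    print("unified pool:", cycles_unified(work, sum(units.values())), "cycles")
```

In the fixed split, the units reserved for vertex and geometry work sit idle while the pixel units grind through their queue; the unified pool spreads the same work across everything.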

The threaded nature extends to data fetch and filtering too, the chip running fetch threads asynchronously from threads running on the clusters, allowing the hardware to hide fetch and filter latency as much as possible, in order to keep the chip working as close to maximum efficiency as possible. Each half pair 'owns' a set of fetch and filter units, as set out by the overview diagram. Hopefully by now the fully scalar and superthreaded (horrible word, but apt) nature of G80 is becoming clear. The chip is a radical departure from G7x, where the architecture was fixed by thread type (8 vertex processors, 6 quads of fragment processors) and data fetch was coupled to the processor ALUs, so you could frequently stall the ALU waiting for data to become available. The old ALUs were also fixed-width vector processors, 5 or 4D wide with somewhat limited co- and dual-issue opportunity.

G80 sports none of those architectural traits.

Threads can jump on and off the SPs for free, cycle-by-cycle; they are dynamically allocated to do whatever work is currently needed, and since the chip is entirely scalar you get auto-vectorisation (effectively where you're trying to fill up unused channels in the ALU that might have sat idle) for free, the goal being to keep as much of what amounts to 128 slots doing work as possible. You might see folks attempting to divide the chip up nice and neatly in order to compare it to chips gone by, but we've already hinted how disingenuous that is and it does a disservice to the way the architecture works.
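The 'free' auto-vectorisation is easier to see with numbers. The sketch below compares a 4-wide vector ALU against four scalar lanes for a made-up instruction stream. It assumes the vector ALU issues one op per cycle with no co-issue, and that the scalar lanes can always be filled with independent components; both are simplifications, and the op widths are invented for the example.

```python
import math


def vector_cycles(op_widths, alu_width=4):
    """One op per cycle on a fixed-width vector ALU (co-issue ignored)."""
    for width in op_widths:
        if not 1 <= width <= alu_width:
            raise ValueError("op width must fit the ALU")
    return len(op_widths)


def scalar_cycles(op_widths, lanes=4):
    """Scalar lanes: every component is its own op, packed across the lanes."""
    return math.ceil(sum(op_widths) / lanes)


def utilisation(op_widths, cycles, lanes=4):
    """Fraction of lane-cycles that did useful work."""
    return sum(op_widths) / (cycles * lanes)


if __name__ == "__main__":
    ops = [3, 1, 2, 4]  # hypothetical component counts per instruction
    v = vector_cycles(ops)
    s = scalar_cycles(ops)
    print("vector:", v, "cycles,", format(utilisation(ops, v), ".1%"), "busy")
    print("scalar:", s, "cycles,", format(utilisation(ops, s), ".1%"), "busy")
```

With enough threads in flight the scalar case trends towards fully busy lanes, which is the whole idea behind keeping those 128 slots working.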

We talked about the dedicated register pool (the hardware is optimised for two or three FP32 temporary registers per object), and access to the global register file, constant cache and texture cache, and there's also data sampling ability by means of data address and fetch units. We mentioned that data fetch is threaded asynchronously to the shader core, which is true, but fetch and address is still tied to a cluster such that threads on those data samplers can only feed the cluster they're tied to, at least initially. Clusters can pass data to each other, but only in a read-only fashion via L2, or otherwise with a memory traversal penalty to DRAM of some kind. And without a public way for us to program the chip to test the intra-cluster and much more limited cross-cluster communication, it's difficult to show that explicitly for the time being.

Each cluster also has 4 pixels per clock of address ability, but 8 INT8 (FP16 half speed) bilerps per clock of fetch and filtering, in order to get data out of VRAM or cache into the core (for any thread type of course) for working on. Therefore the hardware will give you two bilerps per pixel address, for 'free' (and proven with in-house codes up to and including FP32 per channel), rather than the usual one. So that's 32 pixels per clock (ppc) of INT8 bilinear 2xAF, or 32ppc of FP16 bilinear, or 16ppc of FP16 2xAF, or 16ppc of FP32 bilinear, out of the combined texture hardware across the chip, giving you a few rates to ponder as we talk about the rest. We'll get back to fetch and filter performance later in the article.
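Those rates all fall out of the same sum: the chip is limited either by address rate or by filter rate, whichever is lower. A minimal sketch follows, using the per-cluster figures from the text. Two things in it are assumptions: FP32 costing four times an INT8 bilerp is inferred from the quoted 16ppc figure rather than stated outright, and 2xAF is modelled as two bilerps per pixel.

```python
CLUSTERS = 8
ADDRESS_PER_CLUSTER = 4  # pixel addresses per clock, per cluster
BILERPS_PER_CLUSTER = 8  # INT8 bilinear samples per clock, per cluster

# Relative cost of one bilerp. FP16 at half speed is from the text;
# FP32 at quarter speed is inferred from the 16ppc figure (assumption).
FORMAT_COST = {"INT8": 1, "FP16": 2, "FP32": 4}


def pixels_per_clock(fmt, bilerps_per_pixel=1):
    """Chip-wide filtered pixels per clock: the lower of address and filter rate."""
    address_rate = CLUSTERS * ADDRESS_PER_CLUSTER
    filter_rate = (CLUSTERS * BILERPS_PER_CLUSTER) // (
        FORMAT_COST[fmt] * bilerps_per_pixel
    )
    return min(address_rate, filter_rate)


if __name__ == "__main__":
    cases = [
        ("INT8", 2, "INT8 bilinear 2xAF"),
        ("FP16", 1, "FP16 bilinear"),
        ("FP16", 2, "FP16 2xAF"),
        ("FP32", 1, "FP32 bilinear"),
    ]
    for fmt, bilerps, label in cases:
        print(label, "->", pixels_per_clock(fmt, bilerps), "ppc")
```

Plain INT8 bilinear comes out address-limited at 32ppc under this model, which is why the second bilerp per address is effectively free.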

Special function ops (sin, cos, rcp, log, pow, etc) all seem to take 4 cycles (4 1-cycle loops, we bet) to execute and retire, performed outside of what you'd reasonably call the 'main' shading ALUs for the first time in a programmable NVIDIA graphics processor. Special function processing consumes available attribute interpolation horsepower, given the shared logic for processing in each case, with NVIDIA seemingly happy to make the tradeoff between special function processing and interpolation rates. We covered that in a bit of detail previously, back on page 6. Each cluster then feeds into a level 2 cache (probably 128KiB in size) and then data is either sent back round for further processing, stored off somewhere in an intermediary surface or sent to the ROP for final pixel processing, depending on the application.

That's effectively the shader core in a (fairly big) nutshell, making the sampler hardware next on our topic list.