Video Decoding Test

Note: The first published version of this article detailed decoding performance results that were caused by software and driver issues on our Vista x64-based test platform. Investigation by AMD and ourselves followed, and we got to the bottom of why UVD wasn't being activated across the board on the Radeon product stack we were testing. To cut a long story short, we swapped to Vista x86 and took the software preparation precautions required to get things up and running on our test box.

UVD isn't currently officially supported in Vista x64 (it only works there in configurations AMD doesn't support), which is a shame, and under certain conditions it can end up disabled on Vista x86 too. We happened to trigger those conditions because of the way we test. It highlights the need for software that matches the hardware stack in terms of quality, so that you don't have the same crappy experience with your purchase that we had while testing.

Note that because our display device is the Dell 3007 30" LCD monitor, which supports dual-link DVI with HDCP, any device that can't protect both links when driving the panel at native 2560x1600 will have to drop down to a pixel-doubled 1280x800 to view protected content that requires HDCP. Of the boards we're looking at, the 8800 GTX is the one that fails this test, so it has to display the native 1080p video at 720p, and the quality is crap.
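The resolution arithmetic behind that fallback can be sketched in a few lines of Python. This is only an illustration of the numbers quoted above; the function names are our own for the example and aren't part of any driver or player API.

```python
def pixel_doubled_mode(native_w, native_h):
    """Desktop size when each logical pixel covers a 2x2 block of panel pixels."""
    return native_w // 2, native_h // 2


def fits(video, desktop):
    """True if a video frame can be shown on the desktop without downscaling."""
    return video[0] <= desktop[0] and video[1] <= desktop[1]


native = (2560, 1600)                    # panel's native mode (needs both DVI links)
fallback = pixel_doubled_mode(*native)   # mode used when both links can't be protected

print(fallback)                          # (1280, 800)
print(fits((1920, 1080), fallback))      # False: 1080p has to be scaled down
print(fits((1280, 720), fallback))       # True: 720p fits on the fallback desktop
```

A 1080p frame simply doesn't fit on a 1280x800 desktop, which is why the 8800 GTX ends up presenting at 720p.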

When you see 1080p native on our graphs, that's running with a 2560x1600 desktop resolution in Vista, and the player window sized to 1920x1080 (1080p) in the centre of that desktop. We use an in-house software tool to force centring of the PowerDVD window to keep things consistent. When you see 1600p on the graphs, that's the player running full-screen and scaled to the native 2560x1600 of the panel. We test at that resolution to see if we hit decode bandwidth limitations, scaler limitations or what have you. The results might be a little surprising.
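For the curious, the geometry of the two test modes works out as in the sketch below. It is not our in-house tool, just the arithmetic; the function names are made up for the example, and the aspect-preserving scale in the second half is an assumption about how the player fills the panel.

```python
def centred_origin(desktop, window):
    """Top-left corner that centres a window of the given size on the desktop."""
    return (desktop[0] - window[0]) // 2, (desktop[1] - window[1]) // 2


def fit_scale(src, dst):
    """Largest uniform scale factor that keeps src inside dst (assumed behaviour)."""
    return min(dst[0] / src[0], dst[1] / src[1])


desktop = (2560, 1600)
video = (1920, 1080)

# "1080p native": a 1920x1080 window in the middle of the 2560x1600 desktop.
print(centred_origin(desktop, video))            # (320, 260)

# "1600p": full-screen, scaled up to the panel.
scale = fit_scale(video, desktop)
scaled = (round(video[0] * scale), round(video[1] * scale))
print(scaled)                                    # (2560, 1440)

# The "4 megapixel" desktop the GPU is presenting.
print(desktop[0] * desktop[1])                   # 4096000
```

If the player stretches to fill all 1600 lines instead of preserving aspect ratio, the scaled size differs, but the pixel count the GPU has to present stays the same.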

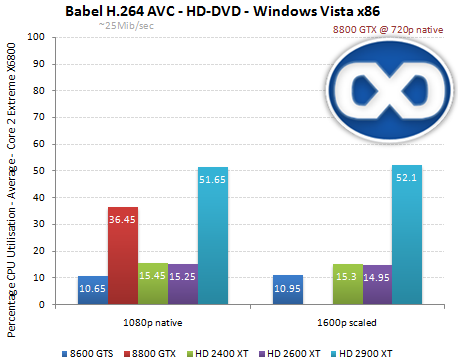

Babel - H.264 AVC - ~25Mib/sec - 102s

We present the results a bit differently this time, collating the CPU util graphs generated by our Boobz software tool (don't ask the names of some of our other in-house binaries, at least not when anyone's listening when we tell you), but the graphs are still available at the bottom of the page if you want to peek at how Vista and the driver will work the CPU cores depending on the product being tested. Most of the graph patterns are reproducible, too.

The three products on test with next-generation video decoding hardware, GeForce 8600 GTS with NVIDIA's VP2 and HD 2600 XT and HD 2400 XT with AMD's UVD, all show significant decreases in CPU utilisation due to the use of those decoder blocks. HD 2400 XT enjoys the same performance as HD 2600 XT, even when asked to decode 1080p AVC and scale to a 4 megapixel presentation. Note that when PowerDVD goes fullscreen the Vista DWM doesn't stop compositing, so the GPU is further used behind the scenes while the AVC decode is happening.

Radeon HD 2900 XT is the least performant hardware on test, although bear in mind that the GeForce 8800 GTX is only presenting to a 1280x800 desktop whereas the HD 2900 XT is drawing a full 4 megapixel desktop at the same time as its video decode efforts.

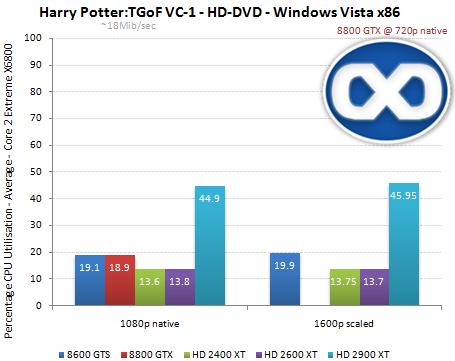

Harry Potter and The Goblet of Fire - VC-1 - ~18Mib/sec - 100s

It seems that G80's VC-1 decoding performance is very decent, approaching that of G84, although neither matches the performance put in by AMD's RV6xx parts. Interestingly, RV6xx's CPU utilisation in this VC-1 test isn't much lower than in the H.264 test, hinting at common blocks being used in hardware at similar levels of efficiency, whereas NVIDIA's approaches for the two CODECs are different, as we know. VP2 doesn't do entropy decode for VC-1, so note that G84 does better with H.264 than it does with VC-1, as expected. R600 struggles with VC-1 at high bitrate, but performance is better than with Babel, as you'd expect (the CODEC is easier to decode and the bitrate is significantly lower).

Notes

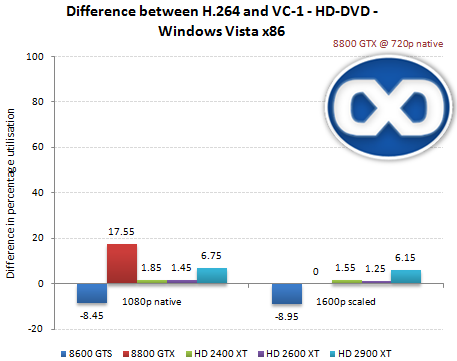

That summary graph just shows you what we've explained above: VP2 is better at H.264 than VC-1, RV6xx performs similarly with both, and both G80 and R600 prefer VC-1, but to similar extents.

The thing to note is that when you count the number of hi-def releases that use VC-1 or H.264 AVC, both CODECs supported by the HD-DVD and Blu-Ray formats in official capacities, VC-1 is by far the more dominant. NVIDIA will argue that VC-1 is easy enough to decode that they don't need VP2 to handle the entropy stage for it, which presumably lets them save gates (and thus area, but we're speculating). Contrast that with AMD, who built UVD with clear goals of battery life in mobile situations in mind, and thus didn't want to take chances with either CODEC, no matter how easy it is to decode in software.

AMD's desktop parts enjoy those same benefits of agnostic decode performance. However, NVIDIA have a stronger solution if you don't care too much about power draw and your CPU is on the borderline when it comes to assisting these chips with their H.264 AVC decoding endeavours (remember it's the harder of the two to do, and neither vendor offloads the process a full 100%).

A further goal for us in our testing of RV6xx going forward will be to determine the lower bound of CPU you need to pair with the parts in order to provide a comfortable decode experience for HD content in a media center setting. We'll get that done when resources permit. So UVD does work, just not officially in x64, which is a shame. Support for that comes somewhere down the line. Apologies again to our readers (and those at HEXUS, since we did the UVD testing for them!), and thanks to AMD for helping diagnose.

CPU Usage Graphs from Boobz

- 8800 GTX - Babel - 720p

- 8800 GTX - Harry Potter - 720p

- 8600 GTS - Babel - 1080p

- 8600 GTS - Babel - 1600p Upscale

- 8600 GTS - Harry Potter - 1080p

- 8600 GTS - Harry Potter - 1600p Upscale

- HD 2400 XT - Babel - 1080p

- HD 2400 XT - Babel - 1600p Upscale

- HD 2400 XT - Harry Potter - 1080p

- HD 2400 XT - Harry Potter - 1600p Upscale

- HD 2600 XT - Babel - 1080p

- HD 2600 XT - Babel - 1600p Upscale

- HD 2600 XT - Harry Potter - 1080p

- HD 2600 XT - Harry Potter - 1600p Upscale

- HD 2900 XT - Babel - 1080p

- HD 2900 XT - Babel - 1600p Upscale

- HD 2900 XT - Harry Potter - 1080p

- HD 2900 XT - Harry Potter - 1600p Upscale