Detecting Discontinuities

The simplest and most common way to detect discontinuities is to examine the final rendered colour buffer. If the difference in colour between two adjacent pixels (their distance) exceeds some threshold value, a discontinuity is reported; otherwise it is not. The distance is often computed in a colour space intended to match human perception more closely than RGB, such as HSL. Figure 9 shows an example of a rendered image, together with the horizontal and vertical discontinuities calculated from it.

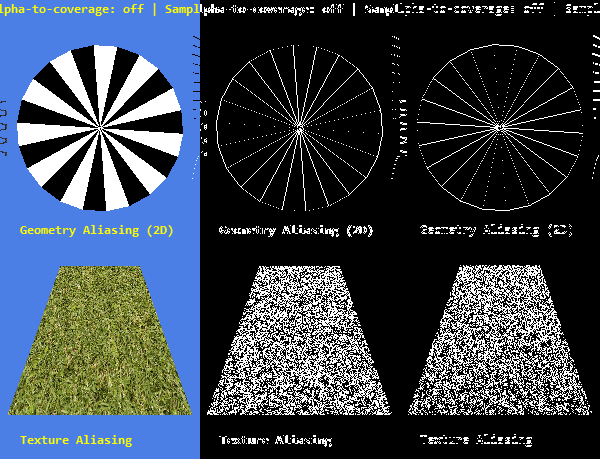

Figure 9: Detecting discontinuities in a colour buffer. Left: image with no AA. Centre: horizontal discontinuities. Right: vertical discontinuities.
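
The threshold test described above can be sketched in a few lines of Python. This is a minimal illustration rather than any particular anti-aliasing implementation: it assumes the image is a 2D list of RGB triples in the range [0, 1], it uses the difference in Rec. 709 luma as the distance metric (a common stand-in for a perceptual colour distance, not the HSL distance mentioned in the text), and the names `luma`, `detect_colour_edges` and the default threshold of 0.1 are made up for this example.

```python
def luma(rgb):
    """Rec. 709 luma of an (r, g, b) triple with components in [0, 1]."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def detect_colour_edges(image, threshold=0.1):
    """Compare each pixel with its left and top neighbours.

    `image` is a 2D list (rows of RGB triples). Returns two boolean masks:
    left_edges[y][x] is True when pixel (x, y) differs from (x - 1, y),
    top_edges[y][x] is True when pixel (x, y) differs from (x, y - 1).
    The threshold is an assumed value and would be tuned in practice.
    """
    height, width = len(image), len(image[0])
    lum = [[luma(px) for px in row] for row in image]
    left_edges = [[False] * width for _ in range(height)]
    top_edges = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if x > 0 and abs(lum[y][x] - lum[y][x - 1]) > threshold:
                left_edges[y][x] = True
            if y > 0 and abs(lum[y][x] - lum[y - 1][x]) > threshold:
                top_edges[y][x] = True
    return left_edges, top_edges


# A 2x2 image: black column on the left, white column on the right.
BLACK, WHITE = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)
left_edges, top_edges = detect_colour_edges([[BLACK, WHITE], [BLACK, WHITE]])
```

In this tiny example only the comparison across the black/white boundary exceeds the threshold, so `left_edges` is set in the right-hand column and `top_edges` stays empty. Because the test looks only at colour, high-contrast texture detail or text would trigger it just as readily as a real geometric edge.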

To speed up discontinuity detection, or to reduce the number of false positives (e.g. within the texture or around the text in Figure 9), other buffers generated during rendering may be used. For both forward and deferred renderers, the Z-buffer (depth buffer) is usually available. It stores a depth value for each pixel and can be used to detect edges. However, this only detects silhouette edges, that is, edges on the outside of a 3D object. To also cover edges within an object, another buffer needs to be used instead of, or in addition to, the Z-buffer. Deferred renderers often generate a buffer storing the surface normal direction for each pixel; in this case, the angle between adjacent normals is a suitable metric for detecting edges.
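
As a rough sketch of how the two geometric tests might be combined for a pair of adjacent pixels, consider the following Python. It assumes depth values normalised to [0, 1] and unit-length normals given as (x, y, z) triples; the function names and both threshold defaults are hypothetical and chosen only for illustration.

```python
import math


def depth_edge(depth_a, depth_b, threshold=0.01):
    """True when two adjacent depth samples differ by more than the threshold."""
    return abs(depth_a - depth_b) > threshold


def normal_edge(normal_a, normal_b, max_angle_deg=30.0):
    """True when the angle between two unit normals exceeds max_angle_deg."""
    dot = sum(a * b for a, b in zip(normal_a, normal_b))
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
    return math.degrees(math.acos(dot)) > max_angle_deg


def is_geometric_edge(depth_a, normal_a, depth_b, normal_b):
    """Flag an edge if either the depth test or the normal test fires."""
    return depth_edge(depth_a, depth_b) or normal_edge(normal_a, normal_b)


# Crease between two faces of a cube: same depth, normals 90 degrees apart.
crease = is_geometric_edge(0.5, (0.0, 0.0, 1.0), 0.5, (1.0, 0.0, 0.0))

# Silhouette: object in front of a distant background, similar normals.
silhouette = is_geometric_edge(0.2, (0.0, 0.0, 1.0), 0.9, (0.0, 0.0, 1.0))

# Flat textured surface: neither depth nor normal changes.
flat = is_geometric_edge(0.5, (0.0, 0.0, 1.0), 0.5, (0.0, 0.0, 1.0))
```

The crease case shows why depth alone is insufficient: both pixels lie at the same depth, so only the normal test reports the edge. The flat case shows the benefit over a colour test, since texture detail on a flat surface changes neither depth nor normal and is therefore not flagged.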