Tesla isn't going to cause a major paradigm shift in every market overnight. It will, however, cause those shifts in individual industries as software is writen to take advantage of CUDA. We had the chance to speak to a number of groups who have been working with CUDA over the past year to see what they've been able to do with it, and the results are impressive.

Headwave

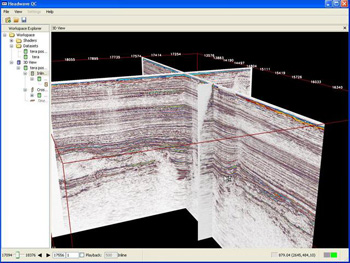

One of the major markets that NVIDIA is targeting with Tesla is the oil and gas industry, and it's easy to see why. Most oil fields that have not yet been discovered are offshore, and as a result, the need for data processing capabilities has grown at an astronomical rate. Seismic data is being acquired at much higher resolutions due to the expense of attempting to tap an undersea oil field--about $150 million--along with new types of data, such as electromagnetics, that weren't even considered a decade ago.

These processed data sets are often in the range of 50 gigabytes in size, down from between half a terabyte and 2.5 terabytes before processing. Headwave is entering the seismic data processing market with a product that uses CUDA to great effect. Traditional algorithms run on a workstation can process a data set at 10-30 MB/s. For a terabyte data set, that's over three weeks of processing time at least. With massive data sets and an inherently parallelizable algorithm, Headwave is able to achieve speeds of 1-2 GB/s. That same terabyte data set can now be processed in less than a day on a single G80.

Evolved Machines

Most people have heard of neural networks in artificial intelligence, but many people don't realize that artificial neural networks as described in the literature have absolutely nothing to do with biological neurons. Artificial neural networks essentially abstract away all of the physical aspects of the neuron in exchange for a greatly simplified model that seems to have reached its limits in terms of usefulness. Obviously, those physical aspects of the neuron are essential for more general purpose computation.

Dr. Paul Rhodes and his team at Evolved Machines are creating neural arrays, which are complete simulations of neural circuits. Because they take into account all of the physical characters of the neuron, they are infinitely more complicated than the neural networks of old. A single neuron contains 2000 differential equations, each of which is updated 100,000 times per second. Each update takes 20 Flops. So, for a single neuron, we're already at 4 GFlops. Dr. Rhodes estimates that a neural circuit capable of performing sensory perception would be composed of 1000 to 2500 neurons, which takes us to between 4 and 10 TFlops. Until very recently, this was the type of application that would take a Top 500 supercomputing cluster, but it's suddenly possible with a rack or two.

Sensory perception is exactly what Evolved Machines is trying to achieve. They're building neural circuits that are capable of visual as well as olfactory recognition--yes, that's right, computers that smell. Already, they've found that their application is about 65 times faster on a single G80 than it is on a current x86 chip.

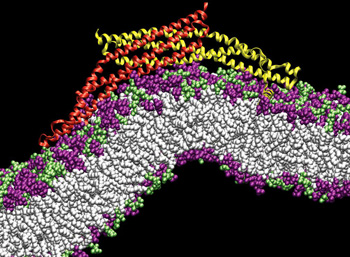

Theoretical and Computational Biophysics Group at UIUC

Evolved Machines weren't the only group demonstrating simulations of molecular biology. John Stone, a developer with the Theoretical and Computational Biophysics Group at the University of Illinois at Urbana-Champaign, was on hand to share his experiences with CUDA development. Stone has added CUDA support to the Nanoscale Molecular Dynamics package (NAMD). As with Headwave and Evolved Machine, parts of NAMD were sped up by orders of magnitude thanks to CUDA.

Stone has documented much of his experiences with CUDA in a lecture given to the ECE 498AL class at UIUC (more on that class later), and it's definitely worth a read to anyone considering using CUDA. Stone focuses on the algorithm that places ions in a simulated virus, and he points out a number of potential bottlenecks that are applicable to any GPU computing project. The number of arithmetic operations must be high enough to effectively hide memory latency, data structures must be modified to prevent branching, different memory regions must be used effectively, register usage has to be kept as low as possible... basically, it's all the rules that we've come to expect when writing 3D code on a GPU.

Of course, this isn't completely straightforward. Stone provides four implementations of the Coloumbic potential kernel. The naive version that doesn't make any attempt to hide memory latency achieves 90 GFlops. The final version, which follows all the guidelines above and maximizes use of the parallel data cache, reaches 235 GFlops. Of course, the resulting code is significantly more daunting than naive version, although once you've got a decent handle on CUDA, the code itself is really not that bad. However, it's very clear that the algorithm was carefully designed, implemented, profiled, and reimplemented several times, which is where the difficulty with CUDA arises.