All characters in UE3 are capable of casting dynamic soft shadows, both for self-shadowing and environment shadowing, and the performance of this is quite reasonable on today's high-end DirectX9 hardware -- which will be in very mainstream consumer PCs by mid-2005. We won't be publicly disclosing implementation details until the first UE3 games ship, however.

With regard to "virtual displacement mapping", also known as offset mapping: beyond the expected bumpy edges on wall corners, will this technology be used for other things, such as bullet holes on walls or enemies? What is virtual displacement mapping currently used for in UE3?

We're using virtual displacement mapping on surfaces where large-scale tessellation is too costly to be practical as a way of increasing surface detail. Mostly, this means world geometry -- walls, floors, and other surfaces that tend to cover large areas with thousands of polygons, which would balloon up to millions of polygons with real displacement mapping. On the other hand, our in-game characters have enough polygons that we can build sufficient detail into the actual geometry that virtual displacement mapping is unnecessary. You would need to view a character's polygons from centimeters away to see the parallax.
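As an illustration of the general idea only (UE3's own implementation is not described here), the widely published offset-mapping formula with offset limiting shifts each texture coordinate along the tangent-space view direction by an amount taken from a height map. The sketch below assumes that formula; the function name `parallax_offset` and the `scale` and `bias` defaults are hypothetical choices, not values from the engine.

```python
import math

def parallax_offset(uv, height, view_ts, scale=0.04, bias=-0.02):
    """Shift a texture coordinate along the tangent-space view direction.

    uv      -- (u, v) texture coordinate of the pixel being shaded
    height  -- height-map sample at uv, in the range 0..1
    view_ts -- vector from the surface toward the eye, in tangent space
    scale, bias -- hypothetical tuning values, not taken from UE3
    """
    vx, vy, vz = view_ts
    length = math.sqrt(vx * vx + vy * vy + vz * vz)
    vx, vy = vx / length, vy / length      # only x and y are used (offset limiting)
    h = height * scale + bias              # remap the height sample to a signed offset
    return (uv[0] + h * vx, uv[1] + h * vy)

# Looking straight down the surface normal produces no shift;
# a grazing view shifts the lookup toward the viewer.
head_on = parallax_offset((0.5, 0.5), 1.0, (0.0, 0.0, 1.0))
grazing = parallax_offset((0.5, 0.5), 1.0, (1.0, 0.0, 1.0))
```

Because the shift happens per pixel in the texture lookup, the polygon count of the wall or floor stays unchanged, which is the trade-off the answer describes.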

You had informed us, during the demo of UE3 at NVIDIA's GeForce 6 launch event, that in-game models consist of approximately 6000-7000 polygons but that this is actually "scaled down" from an original size of millions of polygons. How is "scaling down" achieved? Is there a tool for this or would artists have to create their own low-polygon cage?

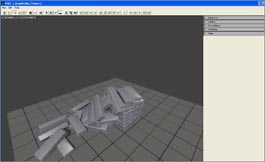

We provide a preprocessing tool with Unreal Engine 3 that analyzes the two meshes and generates normal maps based on the differences. This can be very CPU-intensive, so it can run as a distributed-computing application, taking advantage of the whole team's idle CPU cycles to generate the data.
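The final step of such a bake can be sketched as follows, assuming the tool has already found, for one texel of the low-polygon mesh, the matching surface normal on the detailed mesh. How UE3's tool finds that match is not stated here. The helper names and the orthonormal tangent frame are assumptions made for illustration.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def encode_normal(detail_normal, tangent, bitangent, normal):
    """Express a detail-mesh normal in the low-polygon mesh's tangent frame
    and pack it into 8-bit RGB, the usual normal-map storage format.

    tangent, bitangent, normal -- assumed orthonormal frame at the texel
    """
    n = normalize(detail_normal)
    ts = normalize((dot(n, tangent), dot(n, bitangent), dot(n, normal)))
    # Remap each component from -1..1 to 0..255, rounding to nearest.
    return tuple(int((c * 0.5 + 0.5) * 255 + 0.5) for c in ts)

# Where the two meshes agree, the texel is the familiar "flat" normal-map blue.
frame = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
flat_texel = encode_normal((0.0, 0.0, 1.0), *frame)
tilted_texel = encode_normal((1.0, 0.0, 1.0), *frame)
```

Each texel is independent of the others, which is why work of this kind splits naturally across many machines.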

Are there separate lighting systems for the indoor and outdoor worlds of UE3? Is a kind of global illumination system used, or is it just normal mapping à la Half-Life 2?

"We're building an engine to be suitable for small teams and very large teams alike..." -- Tim Sweeney, on the next-generation UnrealEngine3

The lighting and shadowing pipeline is 100% uniform -- the same algorithms are supported indoor and outdoor. Various kinds of lights (omni, directional, spot) are provided for achieving different kinds of artistic effects.

All lighting is supported 100% dynamically. There is no static global illumination pass generating lightmaps, because those techniques don't scale well to per-pixel shadowed diffuse and specular lighting.

Can you tell us how you would be making the editor for UnrealEngine3 (let's call it UnrealEd3) easier for artists when it comes to writing shaders or level development?

In UE2, the editor was based on two primary editing components: the realtime 3D viewports and the script-driven hierarchical property editor. UE3 adds two more fundamental tools: a visual component editor and a timeline-based cinematic editor. The visual component editor powers the visual shader editor, sound editor, and gameplay scripting framework.

In UE3, shaders are created by artists in a visual environment, by hooking together shader components. Programmers can supply and extend shader components, which take a set of inputs (from textures or other shader components), perform some processing work, and output colors or texture coordinates.
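The component model described above can be pictured as a small graph of nodes, each taking the outputs of other nodes as inputs. The following is a minimal sketch of that idea, not UE3's actual editor or API: the `Component` class and every node in it (`checker`, `constant`, `multiply`, `offset`) are hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Vec = Tuple[float, ...]

@dataclass
class Component:
    """A node that evaluates its inputs, then applies its own processing step."""
    name: str
    process: Callable[..., Vec]
    inputs: Sequence["Component"] = ()

    def evaluate(self, uv: Vec) -> Vec:
        args = [node.evaluate(uv) for node in self.inputs]
        return self.process(uv, *args)

def checker(uv, coords=None):
    # Stand-in for a texture: a 2x2 black and white checker pattern.
    u, v = coords if coords is not None else uv
    on = (int(u * 2) + int(v * 2)) % 2 == 0
    return (1.0, 1.0, 1.0) if on else (0.0, 0.0, 0.0)

def constant(color):
    return lambda uv: color

def multiply(uv, a, b):
    return tuple(x * y for x, y in zip(a, b))

def offset(du, dv):
    # Outputs texture coordinates rather than a color.
    return lambda uv: (uv[0] + du, uv[1] + dv)

# "Hooking together" components: shifted coordinates feed a texture,
# whose color is then multiplied by a constant tint.
coords = Component("shifted_uv", offset(0.5, 0.0))
texture = Component("checker", checker, [coords])
tint = Component("tint", constant((1.0, 0.5, 0.25)))
material = Component("material", multiply, [texture, tint])

dark = material.evaluate((0.1, 0.1))
lit = material.evaluate((0.6, 0.1))
```

In this picture, a programmer extends the system by writing a new `process` function, while an artist only rearranges the connections between existing nodes.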

A huge focus in UE3 has been empowering artists to do things which previously required programmer intervention: creating complex shaders, scripting gameplay scenarios, and setting up complex cinematics. We're building an engine to be suitable for small teams and very large teams alike, and in the past, programmers have been a bottleneck in both situations. The more power we put in the hands of content creators, the less custom programming work is required.

Will UnrealEngine3 be taking advantage of PCI-E and use the GPU/VPU for physics calculations?

All DirectX9 applications will automatically take advantage of PCI-Express as soon as it's available.

We're using the GPU for visual effects processing, which includes both the obvious task of rendering visible pixels, and the invisible supporting work of, for example, generating shadow buffers and performing image convolution.
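For readers unfamiliar with the term, image convolution replaces each pixel with a weighted sum of its neighbours; blurs and similar filters are built this way. The sketch below shows the operation on the CPU in plain Python for clarity. It is not engine code, and the edge-clamping behaviour and the name `convolve` are assumptions made for the example.

```python
def convolve(image, kernel):
    """Convolve a 2D grayscale image with a small odd-sized kernel.

    image  -- list of rows of floats
    kernel -- list of rows of floats, odd width and height
    Samples that fall outside the image are clamped to the nearest edge pixel.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for j in range(kh):
                for i in range(kw):
                    sy = min(max(y + j - oy, 0), h - 1)
                    sx = min(max(x + i - ox, 0), w - 1)
                    # The kernel is indexed in reverse: a true convolution flips it.
                    total += image[sy][sx] * kernel[kh - 1 - j][kw - 1 - i]
            out[y][x] = total
    return out

# A 3x3 box blur spreads a single bright pixel evenly over its neighbourhood.
box = [[1.0 / 9.0] * 3 for _ in range(3)]
spot = [[0.0, 0.0, 0.0],
        [0.0, 9.0, 0.0],
        [0.0, 0.0, 0.0]]
blurred = convolve(spot, box)
```

Every output pixel is computed independently from a fixed set of reads, which is the kind of workload a pixel shader handles well.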

However, given the limitations of the DirectX9 and HLSL programming model, I don't take seriously the idea of running non-visual processes like physics or sound on the GPU in the DirectX9 timeframe. For serious programming work, you need modern data structure features such as data pointers, linked lists, function pointers, random access memory reads and writes, and so on.

GPUs will inevitably evolve to the point where you can simply compile C code to them. At that point a GPU would be suitable for any high-performance parallel computing task.