I later had the opportunity to chat with Dan about how long it takes for games that use new hardware features to appear after those features are introduced. It can often be two or three hardware generations before developers make serious use of certain features. I suggested to him that developers might be supplied with hardware capable of these features before the final hardware reaches consumers. One method I proposed was to build the new design on currently available silicon running at lower speeds. Developers could then work with the features, and if they were given reasonable projections of how fast the final hardware will run, they could target performance levels based on what the test hardware achieves. Dan said that nVIDIA have had numerous discussions with developers along these lines, but it’s simply too costly for them to do. I also asked whether this would help iron out hardware bugs before moving to the final silicon process. Dan explained, however, that bugs are actually removed using the emulation tools they use, and that most chip bugs are introduced during transistor layout. Producing a revision on one silicon process and later moving to a smaller process would therefore mean doing the transistor layout twice, which increases the odds of introducing more bugs into the chips.

However, Dan said that by the time we see the next generation of hardware, we’ll be moving into a whole new realm of programmability. Future versions of current APIs will be better able to abstract the hardware for developers, who will be limited less by what the chip can do than by their imagination in programming these devices. We may eventually return to emulation for developers, with IHVs providing software emulation tools for new graphics capabilities so that developers can learn how to use them. A possible downside to this future graphics-development utopia is that such emulation may run slower than today’s chips. Once the hardware is actually available, however, developers should already have a good understanding of how it operates and, ideally, a much quicker turnaround when implementing it in their game code. The question remains: how many developers will actually make use of this? Sure, some developers will be keen to use the latest and best; however, most will stick to the lowest common denominator, so in all likelihood most games will still be limited by the specifications of the hardware most prevalent in people’s PCs.

For now, numerous nVIDIA board vendors have already announced products based on the new GeForce4 lineup, and consumers will see variations on the reference specifications. I asked what nVIDIA thought of this. Basically, nVIDIA will guarantee the chips at the specified speeds, but if a vendor chooses to sell them clocked faster, that’s also fine with nVIDIA, so long as the vendor honors its warranty.

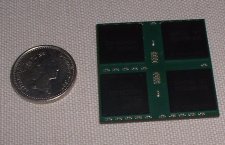

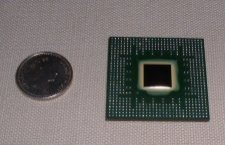

The workstation products based on the GeForce4 series are due to be announced soon, possibly within weeks. There may be a few surprise configurations among these products, as I have been quietly informed that both GeForce4 variants are scalable in a fashion similar to the SLI technology used by 3dfx’s VSA-100 chip. If true, GF4-based workstation boards should be able to scale beyond just two chips. nVIDIA was purportedly already looking at its own approaches to multi-chip scalability when the company purchased 3dfx. It is quite likely that nVIDIA’s solution will address some of the issues in 3dfx’s earlier solutions, such as the lack of an even distribution of geometry processing between the chips.

Well, there we have it: nVIDIA’s entire 3D hardware lineup for the next six months! As ever, they seem fairly confident about its prospects, and right now it’s difficult to see any reason why they shouldn’t be. Unfortunately, we were not able to get any of our technical questions answered at the launch. However, I have sent nVIDIA an email with detailed technical questions; if I get a response, I’ll do a further follow-up with nVIDIA’s answers.