Unlimited Resources

Virtual Video Memory also eases the resource problem in a number of ways. First, AGP/system memory becomes a far more viable storage space than it was in the past, thanks to better overall bandwidth and memory utilization. Second, since virtual memory works on a logical address space, the user of the graphics processor is free to consume as much memory as they want, as long as it stays within the bounds of the virtual address space. It’s up to the implementation to map virtual memory efficiently onto the physical constraints of the user’s computer. Applications consuming inordinate amounts of memory might not achieve optimal frame rates, but they will at least work. Virtual address spaces need not be small, either: 3Dlabs' Wildcat VP uses virtual video memory and has a virtual address space of 16GB. Clearly, resources cease to be all that limited when you can consume 16GB of them.

When virtual memory is applied to shaders, things get a bit more interesting. One problem with using the old memory systems for shaders of unbounded length is that the traditional memory system doesn’t necessarily see a shader as a collection of instructions, but rather as an abstract block of data that may or may not fit into the graphics processor’s instruction slots. Once the shader is loaded, it is executed on every vertex or pixel that follows until the shader is unloaded. Following this line of thinking, it would seem the only ways to achieve longer shaders would be either to keep increasing the number of instruction slots on graphics processors, or to use some form of automatic multi-passing that breaks shaders down into manageable chunks. There is an obvious limit to how many instruction slots can fit onto a graphics processor, so the first option is out. The second option does work, and can work quite well with a proper implementation, but it turns out that the proper implementation is essentially the same as what would be done in a virtual memory system.
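To make the idea of mapping a large virtual address space onto limited physical memory a little more concrete, here is a minimal sketch of demand paging. It assumes a hypothetical 4KB page size and least-recently-used (LRU) eviction; neither detail comes from any real driver or from the Wildcat VP, and the class name `VirtualVideoMemory` is purely illustrative. A page fault here stands in for fetching a page from AGP/system memory.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # hypothetical page size in bytes (an assumption, not a hardware figure)


class VirtualVideoMemory:
    """Hypothetical demand-paged mapping of a large virtual space onto few physical frames."""

    def __init__(self, virtual_bytes, physical_frames):
        self.virtual_pages = virtual_bytes // PAGE_SIZE
        self.page_table = OrderedDict()  # virtual page -> physical frame, kept in LRU order
        self.free_frames = list(range(physical_frames))
        self.faults = 0

    def translate(self, vaddr):
        """Return the physical address for vaddr, paging it in on a miss."""
        page, offset = divmod(vaddr, PAGE_SIZE)
        if not 0 <= page < self.virtual_pages:
            raise IndexError("address outside the virtual address space")
        if page in self.page_table:
            self.page_table.move_to_end(page)  # hit: mark as most recently used
        else:
            self.faults += 1  # miss: would fetch the page from AGP/system memory
            if self.free_frames:
                frame = self.free_frames.pop()
            else:
                _, frame = self.page_table.popitem(last=False)  # evict the LRU page
            self.page_table[page] = frame
        return self.page_table[page] * PAGE_SIZE + offset
```

With a 16GB virtual space and only four physical frames, an application can still touch any page it likes; it simply pays with page faults once its working set outgrows physical memory, which is the "might not be fast, but will work" trade-off described above.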

A better solution presents itself with virtual video memory. Instead of treating shaders as unique blocks of memory, as has been done in the past, treat them just like everything else and split them into pages too. Assuming the graphics processor has enough instruction slots to execute an entire page of shader instructions, shaders can then be executed as a series of pages: load shader page 1, execute it, pause execution while the next page is loaded, resume execution, and so on until the entire program has been executed. The instruction slots then simply become an L1 instruction cache, and everything starts working as it does on general-purpose processors.
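The page-by-page execution scheme above can be sketched as follows. This is a simplified model, not how any real GPU schedules work: it assumes a straight-line shader with no branches, represents each instruction as a Python callable, treats the instruction slots as a small LRU cache holding whole pages, and runs pixels one after another. The function name `shade_pixels` and its parameters are hypothetical.

```python
from collections import OrderedDict


def shade_pixels(program, pixels, slots_per_page, cache_pages):
    """Run a straight-line shader on each pixel, paging instructions through a small cache.

    program: list of callables f(value) -> value standing in for shader instructions.
    Returns (shaded values, number of page loads).
    """
    # Split the shader into pages that each fit into the instruction slots.
    pages = [program[i:i + slots_per_page] for i in range(0, len(program), slots_per_page)]
    cache = OrderedDict()  # instruction slots, modelled as a cache of resident pages
    loads = 0
    results = []
    for value in pixels:
        for index, page in enumerate(pages):
            if index in cache:
                cache.move_to_end(index)  # page already resident: no load needed
            else:
                loads += 1  # pause execution while this page is loaded
                if len(cache) >= cache_pages:
                    cache.popitem(last=False)  # evict the least recently used page
                cache[index] = page
            for instr in cache[index]:
                value = instr(value)  # resume execution with the resident page
        results.append(value)
    return results, loads
```

For an eight-instruction shader split into four pages, a cache of four pages loads each page once and then serves every later pixel from the cache, while a cache of three pages misses on every page of every pixel under this cyclic access pattern. That cliff is the performance dive the section goes on to describe for shaders that outgrow the L1 cache.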

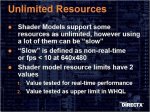

Virtual Video Memory is what allows DirectX Next to claim “unlimited resources”, since there’s no longer any bound on the length of shaders or on the amount of texture memory the user can consume, as long as everything fits into the graphics processor’s virtual address space. There is, however, always going to be a practical limit to these resources. For shaders, whenever a shader is longer than can fit into the graphics processor’s L1 cache, performance will take a relatively sharp dive, as pages now have to be loaded and unloaded repeatedly, with waiting in between, and all of this has to happen for every pixel shaded. Likewise, performance will degrade relatively quickly once textures start spilling over into system memory or, worse, hard disk space. To this end, Microsoft is including two new caps values for resource limits: one for an absolute maximum past which things will cease to work properly, and one for a practical limit past which applications will cease to operate in real-time (defined as 10fps at 640x480).
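One way an application might use the two caps values is to check each resource request against both. The sketch below is hypothetical throughout: `ResourceCap`, `Fit`, and the example limits are illustrative names and numbers, not the actual DirectX Next caps structure. It only encodes the rule stated above: past the practical limit an application should no longer expect real-time rates (10fps at 640x480, i.e. a 100ms frame budget), and past the absolute limit things stop working.

```python
from dataclasses import dataclass
from enum import Enum


class Fit(Enum):
    REALTIME = "within practical limit"
    WORKS_SLOWLY = "within absolute limit, but below real-time"
    UNSUPPORTED = "beyond absolute limit"


@dataclass(frozen=True)
class ResourceCap:
    """Hypothetical pair of caps values for one resource (e.g. shader instruction count)."""

    practical_limit: int  # largest size expected to stay real-time (10fps at 640x480)
    absolute_limit: int   # largest size that works at all

    def __post_init__(self):
        if self.practical_limit > self.absolute_limit:
            raise ValueError("practical limit cannot exceed the absolute limit")

    def classify(self, requested: int) -> Fit:
        if requested > self.absolute_limit:
            return Fit.UNSUPPORTED
        if requested > self.practical_limit:
            return Fit.WORKS_SLOWLY
        return Fit.REALTIME
```

An application could, for example, fall back to a shorter shader when `classify` returns `Fit.WORKS_SLOWLY`, rather than discovering the slowdown at run time.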