General I/O Model

A unified shading model has a number of interesting consequences, some of which may not be immediately apparent. The most obvious addition is, of course, the ability to do texturing inside the vertex shader. This is especially important for general-purpose displacement mapping, though it need not be limited to that. A slightly less obvious addition is the ability to write directly to a vertex buffer from the vertex shader, which allows results to be cached for later passes. This is especially useful with higher-order surfaces and displacement mapping: you can tessellate and displace the model once, store the results in a video memory vertex buffer, and simply do a lookup in all later passes.
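To make the "displace once, look up later" idea concrete, here is a minimal CPU-side sketch in Python. It is not DirectX Next code: the names `sample_nearest`, `displace`, and `DisplacementCache` are hypothetical, the height map is a plain 2D list standing in for a texture, and the dictionary stands in for a vertex buffer kept in video memory.

```python
def sample_nearest(height_map, u, v):
    """Nearest-texel lookup into a 2D list of heights, with u and v in [0, 1]."""
    rows, cols = len(height_map), len(height_map[0])
    x = min(int(u * cols), cols - 1)
    y = min(int(v * rows), rows - 1)
    return height_map[y][x]


def displace(vertices, height_map, scale):
    """Push each vertex along its normal by the scaled height at its UV.

    vertices is a list of (position, normal, uv) tuples; the result is a
    list of displaced positions.
    """
    displaced = []
    for position, normal, (u, v) in vertices:
        h = scale * sample_nearest(height_map, u, v)
        displaced.append(tuple(p + n * h for p, n in zip(position, normal)))
    return displaced


class DisplacementCache:
    """Hypothetical stand-in for a vertex buffer written by the first pass."""

    def __init__(self):
        self._buffers = {}
        self.misses = 0  # number of times the displacement was actually computed

    def get(self, mesh_id, vertices, height_map, scale):
        if mesh_id not in self._buffers:
            self.misses += 1
            self._buffers[mesh_id] = displace(vertices, height_map, scale)
        return self._buffers[mesh_id]
```

The first call to `get` for a given mesh pays for the texture lookups and displacement; every later pass only reads the stored result.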

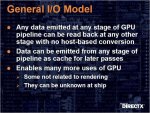

But perhaps the most significant addition comes when you combine these two with the virtual video memory mindset – with virtual video memory, writing to and reading from a texture becomes pretty much identical to writing to or reading from any other block of memory (ignoring filtering, that is). With this bit of insight, the General I/O Model of DirectX Next was born – you can now write any data you need to memory, to be read back at any other stage of the pipeline or even in a later pass. This data need not be a vertex, a pixel, or any other graphics-centric data – given access to both the current index and vertex buffers, you could conceivably use this system to generate connectivity information for determining silhouette edges. In fact, you should be able to generate all shadow volumes for all lights completely on the GPU in a single pass, and then store each in memory to be rendered in later passes (along with the lights associated with the volumes). There’s just one little problem with that – outside of tessellation, today’s graphics processors cannot create new triangles.
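As an illustration of the kind of connectivity information meant here, the following Python sketch finds silhouette edges from an index buffer and a vertex buffer on the CPU. It is only a sketch under stated assumptions: the function names are hypothetical, the mesh is assumed to be closed with counter-clockwise winding seen from outside, and the light is a point light. An edge is treated as a silhouette edge when exactly one of the two triangles sharing it faces the light.

```python
from collections import defaultdict


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def build_edge_map(indices):
    """Map each undirected edge (low index, high index) to the triangles sharing it."""
    edges = defaultdict(list)
    for tri in range(len(indices) // 3):
        i0, i1, i2 = indices[3 * tri:3 * tri + 3]
        for a, b in ((i0, i1), (i1, i2), (i2, i0)):
            edges[(min(a, b), max(a, b))].append(tri)
    return edges


def silhouette_edges(vertices, indices, light_pos):
    """Return the sorted edges whose two triangles disagree about facing the light."""
    facing = []
    for tri in range(len(indices) // 3):
        p0, p1, p2 = (vertices[i] for i in indices[3 * tri:3 * tri + 3])
        normal = cross(sub(p1, p0), sub(p2, p0))
        facing.append(dot(normal, sub(light_pos, p0)) > 0.0)

    result = []
    for edge, tris in build_edge_map(indices).items():
        if len(tris) == 2 and facing[tris[0]] != facing[tris[1]]:
            result.append(edge)
    return sorted(result)
```

Extruding each of these edges away from the light gives the sides of a shadow volume, which is exactly the step that requires creating new triangles.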

Topology Processor

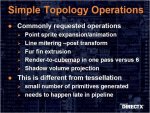

Actually, today’s graphics processors can create new triangles and, in fact, must do so whenever line or point sprite primitives are used. Most consumer graphics processors are only capable of rasterizing triangles, which means all lines and point sprites must, at some point, be converted to triangles. Since both a line and a point sprite end up as two triangles, which means anywhere from two to six times as many vertices (depending on the indexing method), it’s best to do this conversion as late as possible. This existing capability matters because, in essence, these are the very same operations required for shadow volumes. All that’s required is to make this section of the pipeline programmable, and a whole set of previously blocked scenarios becomes possible without relying on the host processor. Microsoft calls this the “Topology Processor”, and it should allow shadow volume and fur fin extrusions to be done completely on the graphics processor, along with proper line mitering, point sprite expansion and, apparently, single pass render-to-cubemap.

Logically, the topology processor is separate from the tessellation unit. It is conceivable, however, that a properly designed programmable primitive processor could be used for both sets of operations.
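The point sprite and line expansion described above can be sketched in a few lines of Python. This is an illustration only, not how any particular hardware does it: `expand_point_sprite`, `expand_line`, and `unindexed` are hypothetical names, and the work is done in 2D screen space for brevity. It also shows where the "two to six times" figure comes from: a line's two vertices become four indexed vertices (2x), while a point sprite's single vertex becomes six unindexed vertices (6x).

```python
import math

# Two triangles covering a quad whose corners are listed in order around it.
QUAD_INDICES = [0, 1, 2, 0, 2, 3]


def expand_point_sprite(center, size):
    """Expand one point into a screen-aligned quad: (vertices, indices)."""
    cx, cy = center
    h = size / 2.0
    vertices = [
        (cx - h, cy - h),
        (cx + h, cy - h),
        (cx + h, cy + h),
        (cx - h, cy + h),
    ]
    return vertices, list(QUAD_INDICES)


def expand_line(p0, p1, width):
    """Expand a line segment into a quad of the given width: (vertices, indices)."""
    dx, dy = p1[0] - p0[0], p1[1] - p0[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("cannot expand a zero-length line")
    # Half-width offset perpendicular to the line direction.
    nx = -dy / length * width / 2.0
    ny = dx / length * width / 2.0
    vertices = [
        (p0[0] - nx, p0[1] - ny),
        (p1[0] - nx, p1[1] - ny),
        (p1[0] + nx, p1[1] + ny),
        (p0[0] + nx, p0[1] + ny),
    ]
    return vertices, list(QUAD_INDICES)


def unindexed(vertices, indices):
    """Flatten an indexed quad into a plain triangle list (six vertices)."""
    return [vertices[i] for i in indices]
```

A programmable stage would run the same kind of logic per primitive, which is why the same hardware slot is a natural home for shadow volume and fin extrusion.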

Tessellator Enhancements

Higher-order surfaces were first introduced to DirectX in version 8, and at first a lot of hardware supported them (nVidia in the form of RT-Patches, ATI in the form of N-Patches), but they were so limited and such a pain to use that very few developers took any interest in them at all. Consequently, all the major hardware vendors dropped support for higher-order surfaces and all was right in the world; until, that is, DirectX 9 came about with adaptive tessellation and displacement mapping. Higher-order surfaces were still a real pain to use, and were still very limited, but displacement mapping was cool enough to overlook those problems, and several developers started taking an interest. Unfortunately, hardware vendors had already dropped support for higher-order surfaces, so even those developers who took an interest in displacement mapping were forced to abandon it due to a lack of hardware support. To be fair, the initial implementation of displacement mapping was a bit Matrox-centric, so it is really no great surprise that there isn’t much hardware out there that supports it (even Matrox dropped support). With pixel and vertex shader 3.0 hardware on its way, hopefully things will start to pick back up in the higher-order surface and displacement mapping realm, but there’s still the problem of the limitations of all current DirectX higher-order surface formulations.

It’d be great if hardware would simply support all the common higher-order surface formulations directly, such as Catmull-Rom, Bezier, and B-splines, subdivision surfaces, all the conics, and the rational versions of everything. It’d be even better if all of these could be adaptively tessellated. If DirectX supported all of these higher-order surfaces, there wouldn’t be much left standing in the way of their use – you could import higher-order surface meshes directly from your favorite digital content creation application without all the problems of the current system. Thankfully, this is exactly what Microsoft is doing for DirectX Next. Combined with displacement mapping and the new topology processor, there’s no longer any real reason not to use these features (assuming, of course, that the hardware supports them).
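For readers who have not worked with higher-order surfaces, here is a small Python sketch of what tessellating one of these formulations involves, using a bicubic Bezier patch as the example. It is a uniform CPU-side tessellator written purely for illustration: the function names are hypothetical, the patch is assumed to be a 4x4 grid of control points, and nothing here reflects the actual DirectX Next tessellator or its adaptive scheme.

```python
def lerp(a, b, t):
    """Linear interpolation between two points of equal dimension."""
    return tuple((1.0 - t) * x + t * y for x, y in zip(a, b))


def de_casteljau(points, t):
    """Evaluate a Bezier curve at t by repeated linear interpolation."""
    pts = list(points)
    while len(pts) > 1:
        pts = [lerp(pts[i], pts[i + 1], t) for i in range(len(pts) - 1)]
    return pts[0]


def eval_patch(control, u, v):
    """Evaluate a Bezier patch: each row at u, then the resulting column at v."""
    column = [de_casteljau(row, u) for row in control]
    return de_casteljau(column, v)


def tessellate_patch(control, level):
    """Uniformly tessellate a patch into level x level quads (two triangles each).

    Returns (vertices, indices); level must be at least 1.
    """
    if level < 1:
        raise ValueError("level must be at least 1")
    n = level + 1
    vertices = [eval_patch(control, i / level, j / level)
                for j in range(n) for i in range(n)]
    indices = []
    for j in range(level):
        for i in range(level):
            a = j * n + i      # corner at (i, j)
            b = a + 1          # corner at (i + 1, j)
            c = a + n          # corner at (i, j + 1)
            d = c + 1          # corner at (i + 1, j + 1)
            indices += [a, b, d, a, d, c]
    return vertices, indices
```

An adaptive tessellator would pick `level` per patch (or per edge) from something like screen-space size rather than using one fixed value, and a displacement map could then be applied to the generated vertices.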