A (brief) history of the graphics chip: from VGA to programmable, general-purpose streaming processor

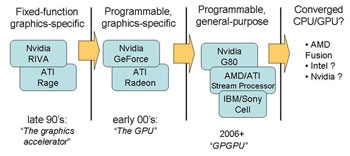

We all should have seen the line between graphics and general-purpose processing blurring several years ago. Over time, graphics chips have made the jump from implementing IBM specifications (e.g. VGA), to fixed-function pipelines that hard-wired the OpenGL and/or Direct3D rendering pipeline in gates, and on to programmable rendering pipelines.

Once media processing went programmable, the writing was on the wall. Not everyone read that writing, but a few vendors and a few users did. There are always a few users out there desperate for as much floating-point power as they can find to tackle daunting computation problems, and a few couldn't help but try to cobble together schemes exploiting GPU floating-point power for non-graphics, streaming-type tasks. The GPGPU movement was born.

Some forward thinkers among GPU vendors not only paid attention to this nascent GPGPU trend, but also began contemplating bigger issues, like how they could ensure their own existence in future computing platforms. If your name was ATI or Nvidia, not to mention one of the many ex-graphics chip makers no longer in the business, you were constantly looking over your shoulder: at CPUs taking on more media processing in software, and at other peripheral chips (most notably the north bridge in Intel-architecture systems) absorbing graphics rendering hardware. It's neither ATI/AMD nor Nvidia but Intel that's the biggest provider of graphics hardware on the planet today, and that's despite the fact that Intel has failed more than once at building a competitive discrete graphics chip.

So what's a forward-thinking graphics vendor that needs to secure its place at tomorrow's computing table going to do? Well, take a page from GPGPU and push its hardware beyond graphics and into the general-computing realm owned by Intel and AMD. That's precisely what Nvidia has started to do, taking its biggest step in that direction with the G80 in late 2006.

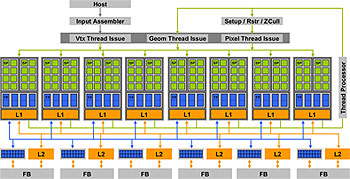

Look at the G80 microarchitecture, and there's scarcely a mention of anything that is graphics-specific. The bulk of the chip consists of 128 parallel stream processors: not vertex engines or pixel shaders, but stream processors (SPs). The streams they process can be vertices and pixels, but there's no longer such a limitation. With the G80, Nvidia also delivered CUDA (for Compute Unified Device Architecture), a new programming environment designed and optimized for general-purpose computing.

By the time the G80 and CUDA appeared, the wheels had been in motion at ATI as well, though the results first surfaced only after the G80, with the company's Stream Computing initiative and its first Stream Processor. And don't forget the other big player in mainstream 3D computing, Sony/IBM, whose Cell technology for (among other things) the PlayStation 3 is at its core a general-purpose, floating-point-intensive stream processing solution.

AMD and Intel were of course paying attention as well. Neither wanted to get caught looking the other way as GPGPU took hold and threatened its bread-and-butter business. Intel had GPU expertise in-house and could start laying the groundwork for its own future plans in this new-look computing landscape. AMD didn't, so it went out and bought ATI. The company has already announced its Fusion program, blending GPU and CPU into one converged, or hybrid, super-chip.

From VGA to programmable GPU to GPGPU and, as it's now beginning to appear, on to a converged general-purpose platform. Driven hard by PC gaming, graphics has been at the forefront of this evolution, but other types of media acceleration, like video codecs, aren't going to be impervious to this trend.

So what about those making a living not off GPUs and CPUs, but video and image processing chips? Is now finally the time for programmable media processors to capture the broader market the way programmable GPUs have?