Q&A with Visceral's Technical Art Director Doug Brooks on Dead Space 2 - Page 2

Published on 16th Mar 2011, written by Alex G for Games Development - Last updated: 15th Mar 2011

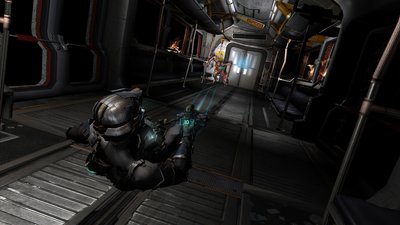

Graphics

Beyond3D: Hi Doug, just for the readers, would you please describe your job title and work at Visceral Games? How long have you been there?

My official title is Technical Art Director and I lead a small group of folks who are skilled at creating both art and code. I’ve been at EA for a little more than six years. Highlights of my career include working on Descent 1-3, Red Faction 2, Dead Space and Dead Space 2.

Beyond3D: Just to backtrack a little, I understand that Dead Space was actually first prototyped on the Xbox (in 2004-2005?). Did the prototyping have much influence over the graphics engine? How far along was the prototype when it was decided to become a title for the next wave of consoles? Was the engine built from scratch or was it based mostly off of a previous title?

One of our biggest philosophies is to continually show amazing things. This was never truer than in the early days of Dead Space. We were a small group with limited funding and we knew that one mistake could mean the end of the project. We had no next-gen tools and it was going to be a while before any showed up. It made sense to use Xbox for R&D while our engineers began work getting our engine running on X360 and PS3. Our team had finished work on the Bond franchise so we used its engine and toolset as a starting point.

Prototyping was one of the smartest things we did and those projects influenced every part of what the franchise is today. Zero g, stasis, dismemberment, HUD, and visual look were all things that began as white boxes in the From Russia with Love engine.

I am pretty sure that Dead Space was always planned as a next-gen title, but we needed the time for our engineers to bring our Bond and Godfather tools to the new consoles. As this transition was made, we made significant changes to the engine and it became a worthy next-gen content tool.

Beyond3D: Each of the three platforms has its advantages and disadvantages, so how was this approached for platform parity? To illustrate the question, in Dead Space 1, we could notice some very slight differences with the PlayStation 3 version compared to the PC/Xbox 360 versions – maybe a bug in shadows resulting in banding and an apparent flipping of the normals for various normal mapped textures. Dead Space 2 seems perfectly equal between the consoles.

Not having parity makes the artist’s job a lot more time consuming and folks complain that your game is somehow better on other systems. As such, there is a huge effort on our team to stay as close to parity as possible. We generally try to make sure all new features work for both consoles before we release to the team for use. We also monitor the game’s performance at every step of the level’s creation and make sure all target devices hold at a reasonable 30 fps and take action when differences are found.

Beyond3D: For Dead Space 1&2, how is the rendering pipeline set up? I believe Mr. Engels had listed Dead Space among the games that used a variant of Light Pre-Pass deferred lighting. How was this decided upon for the PC/PS3/360 as opposed to going with fully deferred shading?

In an early test, we re-skinned the Godfather character as Isaac and got him marching around in a small level to examine lighting and look. The level was built to support lots of lights with moving shadows. We used creative tricks with triggers that turned them off and moved them around the scene. Eventually, we came to something indicative of the look we wanted but it seemed daunting with the tech that existed at the studio. Around the same time we also started hearing about deferred lighting and it seemed like a good fit for our problem. It was such a new thing then that we weren’t sure it could work but it promised more real time lights and that’s what we needed. The results of the deferred lighting tests were strong enough that we eventually just stayed with that technique and didn’t look at many other options once it was decided. It was a complicated time because we were also making the game work on next gen and our rendering engineer resources were very limited.
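For readers unfamiliar with the technique, here is a minimal CPU-side sketch of the Light Pre-Pass idea mentioned in the question. It is not Visceral's implementation. All names (`Surface`, `PointLight`, `geometry_pass`, `light_pass`, `material_pass`) are hypothetical, the linear falloff is an assumption, and each "pixel" is simply one surface sample. The point it illustrates is why the approach scales to many lights: lighting is accumulated per pixel from a thin buffer of positions and normals, and materials are applied afterwards in a separate pass.

```python
import math
from dataclasses import dataclass


@dataclass
class Surface:
    position: tuple  # world-space position of the visible sample
    normal: tuple    # unit surface normal
    albedo: tuple    # diffuse colour (r, g, b)


@dataclass
class PointLight:
    position: tuple
    color: tuple
    radius: float    # light has no effect at or beyond this distance


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def geometry_pass(surfaces):
    # Pass 1: store only what lighting needs (position and normal),
    # deliberately leaving material data out of the buffer.
    return [(s.position, s.normal) for s in surfaces]


def light_pass(gbuffer, lights):
    # Pass 2: accumulate diffuse light per pixel. Cost grows with the
    # pixels each light touches, not with scene geometry.
    accum = [[0.0, 0.0, 0.0] for _ in gbuffer]
    for light in lights:
        for i, (pos, normal) in enumerate(gbuffer):
            to_light = _sub(light.position, pos)
            dist = math.sqrt(_dot(to_light, to_light))
            if dist == 0.0 or dist >= light.radius:
                continue
            direction = tuple(c / dist for c in to_light)
            n_dot_l = max(0.0, _dot(normal, direction))
            falloff = 1.0 - dist / light.radius  # assumed linear falloff
            for c in range(3):
                accum[i][c] += light.color[c] * n_dot_l * falloff
    return accum


def material_pass(surfaces, light_buffer):
    # Pass 3: draw the geometry again and combine each material's
    # albedo with the light accumulated for its pixel.
    return [
        tuple(a * l for a, l in zip(s.albedo, lit))
        for s, lit in zip(surfaces, light_buffer)
    ]


def render(surfaces, lights):
    gbuffer = geometry_pass(surfaces)
    light_buffer = light_pass(gbuffer, lights)
    return material_pass(surfaces, light_buffer)
```

Because the material pass only sees accumulated light, adding another light adds work to the light pass alone. A real renderer would do this on the GPU and would also handle specular terms and shadows, which are left out here.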

Beyond3D: Whilst deferred lighting allows the use of many more lights than a traditional forward, multi-pass renderer, it does pose some limitations on material/surface lighting. How was this handled for both games?

Deferred lighting does a poor job with transparency. At one point, we noticed a severe drop in performance and found out that our old-school eyelashes were costing more than anything on screen. We often used cheaper shaders that faked reality to get around issues. Our glass, for example, doesn’t take lighting at all. Early in the project, we experimented with heavier glass shaders but they proved to be just too much. I still have nightmares about five layers of full-screen refractive glass in the decontamination room. It looked awesome, but played very poorly.

Beyond3D: Another caveat to using a deferred renderer is the loss of hardware alpha blending, requiring a separate render pass. Was this particularly costly for Dead Space 1 & 2? For instance, in some scenes there appeared to be quite an abundance of smoke effects that filled the screen, yet performance held up quite well on console and there was little to no sign of low-resolution artefacts from having used a lower res alpha buffer.

We have suffered from fill rate issues so we implemented a set of performance gauges in the engine. We also have the luxury of having an amazing VFX system that allows artist-driven performance choices like an option to ignore deferred lighting. We really saw the major issues coming from the depth buffer since each group had a shared contribution to it and accountability was vague.
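The interview does not say what those gauges measured. As a purely illustrative sketch, one common kind of fill-rate gauge estimates overdraw: the total screen area of the blended quads drawn in a frame, divided by the area of the screen. Everything below (`Quad`, `overdraw_factor`, `over_budget`, and the budget value the caller passes in) is hypothetical and not taken from Visceral's engine.

```python
from dataclasses import dataclass


@dataclass
class Quad:
    width_px: float   # on-screen width of a blended quad, in pixels
    height_px: float  # on-screen height, in pixels


def overdraw_factor(quads, screen_w, screen_h):
    # Sum the screen area covered by each quad, clamped to the screen
    # size, and express it as a multiple of the full screen area.
    covered = sum(
        min(q.width_px, screen_w) * min(q.height_px, screen_h)
        for q in quads
    )
    return covered / (screen_w * screen_h)


def over_budget(quads, screen_w, screen_h, max_overdraw):
    # max_overdraw is a budget chosen by the caller, not a known
    # engine constant.
    return overdraw_factor(quads, screen_w, screen_h) > max_overdraw
```

Under this estimate, five full-screen layers at 1280x720 give an overdraw factor of 5.0, meaning every pixel is shaded five times before anything else in the frame is counted.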