More Graphics

Beyond3D: MLAA seems to be making the rounds with Sony titles, AMD hardware on PC, and to some extent on 360. During the development of Dead Space 2, were more complex post-process edge filters considered?

We had a really cheap filter in place on DS1 just to get past the console requirements. On DS2 the requirements went away and so did the AA. It all comes down to milliseconds: you only have so many, and a good AA technique meant less of something else. I’ve come to realize that the trade for deferred lights is worth it.

Beyond3D: What are some of the challenges in lighting the levels in both games? I couldn’t help but notice that many of the placed lights did not overlap, for instance. (Maybe a couple screenshots of the editor or tools?)

Since our lighting is collected in a buffer before it’s combined with geometry, I find it helpful to think of the lighting pass as a full screen image. Each lighting volume writes pixels in that image and more pixels mean more time to compute lighting. Large full screen lights are one way of killing performance but so is the stacking of many small lights. We’re diligent about hiding lights when moving from area to area and often need to do clever tricks with triggers to hide lights as the player traverses the level.

Generally, lighting is a very intuitive process, with a real-time preview and live update of the major lighting in a scene. Tweaks are instantaneous and it’s very fast to iterate with our tools. We also have very high lighting budgets in the game and we rely heavily on in-game performance graphs to keep things in check.
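As a rough illustration of the cost model described in the answer above (a sketch, not the studio's actual tooling), the lighting pass can be treated as a count of light-buffer pixel writes. The `LightRect` type, the `shaded_pixels` function, and the 1280x720 buffer size are all assumptions made for the example. Each light is approximated by its screen-space bounding rectangle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LightRect:
    """Screen-space bounding rectangle of a light volume, in pixels (hypothetical type)."""
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive


def shaded_pixels(lights, width, height):
    """Sum the pixels each light writes; overlapping lights are counted once per light."""
    total = 0
    for light in lights:
        # Clip the rectangle to the screen so off-screen lights cost nothing.
        w = max(0, min(light.x1, width) - max(light.x0, 0))
        h = max(0, min(light.y1, height) - max(light.y0, 0))
        total += w * h
    return total


# Assumed buffer size for the example only.
WIDTH, HEIGHT = 1280, 720

one_fullscreen = [LightRect(0, 0, WIDTH, HEIGHT)]
# Twelve 320x240 lights stacked on the same spot.
stacked_small = [LightRect(400, 200, 720, 440)] * 12

print(shaded_pixels(one_fullscreen, WIDTH, HEIGHT))
print(shaded_pixels(stacked_small, WIDTH, HEIGHT))
```

Under these assumptions the twelve stacked small lights write as many pixels as the single full-screen light, which is why both cases show up as spikes on a performance graph.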

Beyond3D: Was HDR lighting implemented? How was that approached between the three platforms? What lighting model was used, and does that include a baked component (à la Global Illumination)?

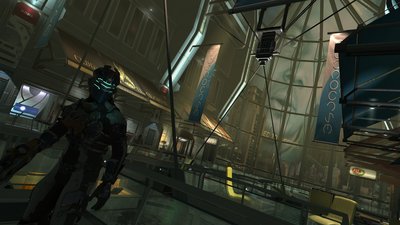

We evaluated HDR lighting at the start of Dead Space and again at the start of DS2 but chose not to use it because the performance and memory hit is extreme. Our game is very dark and moody and hides the lack of HDR well. I can only think of one point in the production where I really wished we had a greater dynamic range: the original concepts for the Mall had harsh noon-day lighting and we found it extremely hard to do convincingly. Eventually we decided to change the time of day and work with our tools instead of fighting them.

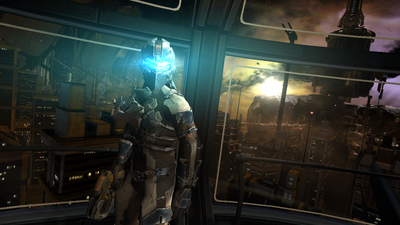

We expect that every level in the game has baked ambient occlusion. We prefer real-time lights but there are several vistas in the game that use other types of baked maps as well. The large draw distances of the Sprawl are done with a combination of lightmapped geometry and a technique I came up with that uses low-res geo mapped with high-res renders out of mental ray/Maya.

Beyond3D: In the Unitology Church, there is a section with a multitude of cryogenic coffins, where the bodies inside didn’t look quite modeled as polygons. Was this a parallax effect or were they indeed modeled, considering that some of them could explode to reveal necromorphs instead? Likewise, the corruption/creep appeared to have a translucent layering to it, and I was wondering if that was also a parallax effect. It reminded me of a couple other games that extended parallax mapping to render fake interiors of building windows (e.g. Crackdown and Halo ODST).

Both the crypt and corruption shaders have parallax mapping components and both forced us to come up with some pretty inventive techniques to make them work. Corruption, for example, reveals underlying layers as light hits it and it also has snake-like bulging motions that are rendered in an off-screen buffer. We also scale the movement’s contribution with distance so the effect is readable from farther away. Shaders like these, however, cannot receive internal shadows and can be hard to blend into the scene, so both took a fairly long time for us to get right.
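For readers unfamiliar with the terms, here is a minimal sketch of the two ideas mentioned in that answer: a classic parallax texture-coordinate offset, and a gain that grows with distance. It is not the game's shader. The function names, the `scale`/`bias` defaults, and the `near`/`far`/`max_gain` values are all hypothetical, and the linear ramp is just one plausible way to scale motion with distance.

```python
import math


def parallax_uv(uv, view_ts, height, scale=0.04, bias=-0.02):
    """Classic parallax offset along the tangent-space view direction.

    view_ts points from the surface toward the camera and must have z > 0.
    height is the sampled height-map value in [0, 1].
    """
    vx, vy, vz = view_ts
    length = math.sqrt(vx * vx + vy * vy + vz * vz)
    vx, vy, vz = vx / length, vy / length, vz / length
    h = height * scale + bias
    # Shallower view angles (small vz) shift the lookup further.
    return (uv[0] + h * vx / vz, uv[1] + h * vy / vz)


def motion_gain(distance, near=2.0, far=20.0, max_gain=3.0):
    """Hypothetical ramp: amplify animated bulging as the camera moves away."""
    t = (distance - near) / (far - near)
    t = min(1.0, max(0.0, t))
    return 1.0 + t * (max_gain - 1.0)


# Looking straight down the normal leaves the coordinate unchanged.
print(parallax_uv((0.5, 0.5), (0.0, 0.0, 1.0), height=1.0))
# A 45-degree view shifts the lookup sideways.
print(parallax_uv((0.5, 0.5), (1.0, 0.0, 1.0), height=1.0))
# Gain at the near limit, halfway, and beyond the far limit.
print(motion_gain(2.0), motion_gain(11.0), motion_gain(50.0))
```

In a real shader the bulge amplitude read from the off-screen buffer would be multiplied by something like `motion_gain` before it feeds the height value, so distant corruption still visibly moves.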

Beyond3D: Was it an artistic or performance decision to limit the motion blur in both games to extremely fast objects like necromorph attacks or to non-playable, cinematic sequences? How was it implemented?

Our motion blur is placed by vfx artists and is something we inherited from one of the other projects in the studio. The twitcher from Dead Space comes to mind as something that really needed the blur. He looked comical until we added it and it really sold his fast stuttering motions.

Beyond3D: Were there many changes in the graphics rendering between the two games? The character lighting seemed to be improved, although in a subtle manner. Also, there are much bigger outdoor areas in comparison to the first game – was that a trivial extension?

We are always working on the engine, but for DS2 we targeted specific graphical features that were driven by concept art and the needs of the game. I think the difference you see between the two games has more to do with us learning how to better use our tools. We adopted new techniques for building our human characters which improved the quality of both the mesh and texture maps. We did make subtle changes to character shaders but nothing significant.

The large outdoor areas were a much trickier prospect. One day, our art director casually asked me to take on making the station outside. We had concept art and there was geometry out the window, but what had been placed in game relied heavily on dense fog and bright windows to sell the shots. I had no idea at the time that the desire was to kill the fog and draw a giant space station from wildly varying vantages. I spent several months coming up with a plan and tools and then many months building and rendering much of the final station in the game. I ultimately settled on a technique that used renders of high-res geometry and I wrote tools to place these images on 3D cards in the world. Actually, I’m pretty proud of the results; the distant Sprawl in the Halo jump portion of the game is something like 1600 polys.

Beyond3D: What other shadow techniques were evaluated (e.g. Stencil shadows, Variance Shadow Maps)?

We read the papers and talked with other groups in EA using techniques like Variance Shadow Maps but did not actively test them in the game.

Beyond3D: What were the texture resolutions and polygon numbers like for the main characters and necromorphs? How much memory was allocated to them including the environment bearing in mind the heavy amounts of streaming?

Isaac has several different suits that are generally around 25,000 faces and has 3-4 sets of 1024 and 512k maps. A typical necromorph can be anywhere from 3,000 to 15,000 polygons since they range in size and variety; we try to use only 2 sets of maps on them. These numbers are high because both Isaac and the necromorphs can be dismembered at any moment and we need undamaged and damaged states around for when that happens. As implied, we need a lot for the characters and use about 7,300 kB for character geo at any given moment. Textures take 35-40 megs.
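To give a feel for how map sizes add up to a budget like this, the sketch below estimates the footprint of one block-compressed texture with a full mip chain. The interview does not say which texture formats the game used. The 8 and 16 bytes per 4x4 block figures are the standard sizes for DXT1 and DXT5, and choosing those formats here is purely an assumption; `texture_bytes` is a hypothetical helper.

```python
def texture_bytes(width, height, bytes_per_block, mips=True):
    """Size of a 4x4 block-compressed texture, optionally with its full mip chain."""
    total = 0
    w, h = width, height
    while True:
        # Each mip level is stored in whole 4x4 blocks, with at least one block per axis.
        blocks_x = max(1, (w + 3) // 4)
        blocks_y = max(1, (h + 3) // 4)
        total += blocks_x * blocks_y * bytes_per_block
        if not mips or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total


DXT1_BLOCK = 8    # bytes per 4x4 block (assumed format)
DXT5_BLOCK = 16   # bytes per 4x4 block (assumed format)

size_1024_dxt1 = texture_bytes(1024, 1024, DXT1_BLOCK)
size_1024_dxt5 = texture_bytes(1024, 1024, DXT5_BLOCK)
print(f"1024x1024 DXT1 with mips: {size_1024_dxt1 / 1024:.1f} KiB")
print(f"1024x1024 DXT5 with mips: {size_1024_dxt5 / 1024:.1f} KiB")
```

Under these assumptions a single 1024 map costs well under a megabyte with DXT1 and a little over a megabyte with DXT5, so several sets per character, doubled up for damaged states, quickly account for a sizeable share of a 35-40 MB texture budget.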

Beyond3D: During my time spent playing DS2 on console, it seemed like it had a decent amount of texture filtering. Anisotropic filtering is one of those features that seems to be rarely used in console games. What are the performance implications on consoles, and how do you determine whether or not it should be used?

I once found an entire room in the game tagged as aniso. Our artists had secretly started using that flag because it looked better. Unfortunately, doing an entire room was way too much and we removed it. I recommend it for use on large flat surfaces that are perpendicular to the camera: think streets or floors. I’ve never measured the cost but it doesn’t take much to feel its impact on the scene, so we only use it on big-win items.

Beyond3D: Late in the game, there is one very brief scene of what appear to be hundreds (or maybe thousands?) of necromorphs. How was this achieved within the performance budget on consoles? What was the most taxing part about the implementation? And was there some Level-Of-Detail trickery considering how far away they were?

They are all low res particles. This shot was all done with the magic of vfx, using some of the tricks team members learned while working on Lord of the Rings games. The original concept art showed a much closer camera view which would have been vastly harder.

Beyond3D: When an Infector successfully infects a host, what is involved during the transformation, and what are the implied limitations for the modeling? The intro to Dead Space 2 has to be the most elaborate transformation I’ve seen in recent memory.

The transformation in the intro is quite different from our in-game infector transformations. We handle in-game transformations by hiding the models with a lot of vfx while we swap in new characters. The intro’s transformation was a much bigger deal. The goal from production was to show an extremely close-up morph using existing tech in the engine. We didn’t have robust blend-shapes, so this shot was animated entirely with bones on a special character that had cuts built in and that were hand-animated to unwrap.

Beyond3D: Going forward, how do you feel about recent efforts for real-time global illumination (such as Crytek’s Light Propagation Volumes) compared to a baked approach (à la Geomerics’ Enlighten) considering that there are many dynamic objects in Dead Space and that lighting is very much a central character?

I’d love for everything to eventually be in real time but I see a hybrid approach being the most common scenario for a while. I would never give up real time lighting of objects that are close to the camera but I’d like to explore baking indirect illumination or using dual lightmaps when a next generation of consoles comes along. I view real time global illumination in the same vein as screen space ambient occlusion; it’s cool and can better the image, but it’s not going to look as good as something baked in mental ray.